Part 3: Evaluation Criteria - "Is it good enough FOR WHAT"

(This is the third post in the series on architecture review techniques. Check out Part 1 and Part 2 if you haven’t done so already)

Evaluation criteria - you should definitely have some

Seriously. That’s not just a throwaway line to kick off a blog post. You’ve probably also been in situations where someone has asked you, «Can you check out this architecture and tell me if it's ok?». Sure, you can usually come up with some feedback based on experience, but mostly you want to reply with, «Can I tell you if it’s ok FOR WHAT? What's important for you? Is it security, performance, maintainability...?». You need to work on your evaluation criteria if you want to deliver a useful architecture review.

There are plenty of techniques for capturing evaluation criteria, such as:

- experience

- scenarios

- checklists

- design principles

- architecture smells

- anti-patterns

- metrics

This post is a bit long but working with evaluation criteria can be the most important part of a review, so I think it's worth it. As an additional benefit, working with quality attributes, which are the basis for evaluation criteria, is a tool that will give you testable design criteria that you can use to drive an architecture design process and not just review an architecture.

But first a word on experience. You can come a long way by relying solely on experience to perform the review - especially if you actually have both the technical and domain experience that you think you do. But you will get more out of an architecture review if you take the time to systematically work through your evaluation criteria first.

tl;dr summary:

- determine which qualities (or quality attributes) are most important for the system to deliver - eg: performance, maintainability, usability, etc

- use checklists, generic requirements and technical requirements for criteria that are relevant across solutions and projects

- elicit verifiable scenarios that operationalise the generic requirements and exemplify qualities that are specific to your solution

- relying on experience can work if you have deep domain knowledge in addition to software architecture expertise.

System Qualities

«...The architecture is the primary carrier of system qualities, such as performance, modifiability, and security, none of which can be achieved without a unifying architectural vision. Architecture is an artifact for early analysis to make sure that the design approach will yield an acceptable system. Architecture holds the key to postdeployment system understanding, maintenance, and mining efforts…»

SEI definition

Evaluation criteria start with the system qualities. Typically these have been called non-functional requirements, but more and more people are using the terms “quality attributes” and “quality requirements” instead. Ideally, whoever wants the system reviewed would have a list of system qualities that they want the solution to achieve - but very few people have them at a level that can be used for a review. Often there is a document somewhere in the organisation called "Non functional requirements (NFR)" that lists a mix of generic design goals, developer guidelines, and specific test criteria and constraints that are applicable across all projects. For example, the following are taken from some real non-functional requirements documents:

- “The solution must be flexible enough to evolve as the business requires”

- “Response times for customer-facing webapps must be < 2secs for 95% of all requests”

- “Always use the prescribed logging package and not System.out…”
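Of the three examples, only the response-time requirement is directly testable as written. As a rough sketch of what verifying it could look like, the Python below checks a set of measured response times against the "< 2secs for 95% of all requests" limit. The function names, the nearest-rank percentile method, and the sample measurements are all my own assumptions for illustration; they do not come from any of the NFR documents.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile: the smallest sample such that at least
    pct percent of all samples are less than or equal to it."""
    if not samples:
        raise ValueError("need at least one sample")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


def meets_response_time_nfr(samples_secs, limit_secs=2.0, pct=95):
    """True if the pct-th percentile response time is below the limit."""
    return percentile(samples_secs, pct) < limit_secs


# Hypothetical response times in seconds, e.g. taken from a load test.
samples = [0.4, 0.6, 0.5, 1.1, 0.9, 0.7, 1.8, 0.3, 0.8, 3.5,
           0.5, 0.6, 0.4, 1.2, 0.9, 0.7, 1.0, 0.6, 0.5, 0.8]

print(percentile(samples, 95))           # 1.8
print(meets_response_time_nfr(samples))  # True
```

The single 3.5 second outlier does not break the requirement because it falls outside the 95% that the requirement covers. The first requirement ("flexible enough to evolve") cannot be checked this way until it has been turned into something measurable.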

In addition there can be solution-specific quality attributes that are important for your system, and these need to be verified with their own quality requirements that are probably not mentioned in the generic list of NFRs. Consider a boring document archive system that you need to have for compliance reasons. It is most likely a long-lived, non-innovative solution where sustainability is a very important quality attribute - that is, how can the system be maintained over a 10+ year period. Sustainability is a quality attribute that is not usually mentioned in a common set of requirements but it could be quite important to some specific solutions.

Extracting the total set of requirements for the qualities that you want your system to have, involves both the identification of those requirements that are solution-specific and the operationalisation of those generic common requirements within the context of the system under review.

An important step in an architecture review is to elicit these criteria and one of the most useful results from an architecture review can simply be to get architects and product owners more proactive in eliciting, refining, maintaining, and testing these qualities in the design evolution of the solution.

Identifying and prioritising system qualities

Start by determining which qualities are relevant for your system. There are many sets of -ilities you can use, but it can be useful to start with the ones provided in the ISO 25010 software quality model standard and then customise them as needed for your particular project.

As an example of customisation, perhaps the Usability aspects aren’t relevant for your back-end project; or you need to extend the refinements for Security to include Authentication, Defense-in-depth, etc; or you need an additional quality which isn’t depicted in the model - for instance issues around DevOps or legal Compliance. Wikipedia has quite a comprehensive list of system qualities that you can draw on when customising the model.

You might identify the important qualities and refinements simply from the business drivers and scope of the review or perhaps you will need to evolve the tree as part of scenario workshops, which I’ll describe later in this blog.
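To make the customisation step concrete, here is a minimal sketch of a quality tree held as a plain dictionary. The top-level names are the eight characteristics of ISO/IEC 25010:2011; the `customise` helper, its parameters, and the example refinements are hypothetical and only mirror the customisations described above.

```python
# Top-level characteristics of the ISO/IEC 25010:2011 product quality model.
# Their standard sub-characteristics are left out to keep the sketch short.
ISO_25010 = [
    "Functional suitability",
    "Performance efficiency",
    "Compatibility",
    "Usability",
    "Reliability",
    "Security",
    "Maintainability",
    "Portability",
]


def customise(base, drop=(), refine=None, add=None):
    """Build a project-specific tree: quality name -> list of refinements.

    drop   -- qualities that are not relevant for this project
    refine -- extra refinements for qualities already in the model
    add    -- qualities that the base model does not contain
    """
    tree = {quality: [] for quality in base if quality not in drop}
    for quality, refinements in (refine or {}).items():
        tree[quality].extend(refinements)  # KeyError if the quality was dropped
    for quality, refinements in (add or {}).items():
        tree[quality] = list(refinements)
    return tree


# A hypothetical back-end project.
tree = customise(
    ISO_25010,
    drop={"Usability"},
    refine={"Security": ["Authentication", "Defense-in-depth"]},
    add={"DevOps": [], "Legal compliance": []},
)

for quality, refinements in tree.items():
    print(quality, refinements)
```

A dictionary like this is easy to turn into whatever visual form works in a workshop, and it can grow as refinements and scenarios are added.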

Most of these qualities are well understood, except perhaps those under “functional suitability”. You may assume that all these are covered under testing. However, I once worked on a project to automate the processing of social security applications. It was only in a scenario workshop with case-handlers that we learned the importance and consequence of ensuring that the system was completely correct with respect to the relevant legislation. For instance, if the case was sent to an appeals court then the government agency needed to show exactly how the automatic processing occurred. This required two changes: a) extensions to the domain model to record far more information about rule execution so that processing could be recreated at a later date; and b) a change from user stories and acceptance criteria, for functionality directly connected to legal compliance, to a more detailed technique - RuleSpeak - that removed ambiguity and interpretation by developers.

The SEI uses a Quality Utility Tree, which can be cumbersome to use in practice, but you can represent the qualities in several different ways. For instance my colleague Mario uses an impact map, and here are some other examples from Arnon Rotem-Gal-Oz's blog series on system qualities:

- Blog series.

- Nice summary on stackexchange.

- And an example tree.

Whichever representation you use, it serves two purposes:

- To get you thinking about the evaluation criteria you need to use in an evaluation. Even if you are going to evaluate based on experience, it’s useful to work through all qualities that are important for the solution owner in order to minimise the chance that you overlook something important.

- Have a representation of system quality that you can discuss with product owners, domain experts, etc who will help identify, prioritise, and refine testable evaluation criteria. It's not often these stakeholders consider issues beyond functional user stories and a visual representation can help drive workshops to elicit evaluation criteria. It also helps get them to take more ownership of these quality issues - especially when they have to vote on priority and there are tradeoffs that need to be made.

Evaluation criteria based on system qualities

Once you have the important quality attributes for the solution then you can exemplify them with testable evaluation criteria. These usually take the form of:

- scenarios or

- checklists

Scenarios

Scenarios are the most often recommended approach for capturing evaluation criteria. For each quality attribute you work with relevant stakeholders in the project to capture specific, quantified scenarios that you can use as acceptance test cases for the architecture. For instance, you can see example scenarios in a quality utility tree in the following image.

These scenarios need to be verifiable. One technique is to use SMART scenarios - specific, measurable, actionable, realistic, and timebound. Another format is to specify Context, Stimulus, and Response from the system. These scenarios are like acceptance test cases for the architecture, and the Context, Stimulus, Response format is very similar to the Given, When, Then format used in BDD/Specification By Example that you may already be familiar with.

- Given (Context) normal conditions

- When (Stimulus) a write operation on an entity occurs

- Then the (Response) from the system should be less than 500 milliseconds.
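The write-operation scenario above can also be captured as data, which makes it easy to check against measurements later. This is only a sketch: the `Scenario` class, its field names, and the method names are hypothetical, and a real scenario may need a richer response measure than a single millisecond limit.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Scenario:
    quality: str     # the quality attribute this scenario exemplifies
    context: str     # Given
    stimulus: str    # When
    response: str    # Then
    limit_ms: float  # quantified response measure, in milliseconds

    def is_satisfied_by(self, measured_ms: float) -> bool:
        """True if a measured response time is strictly below the limit."""
        return measured_ms < self.limit_ms

    def describe(self) -> str:
        return (f"Given {self.context}, when {self.stimulus}, "
                f"then {self.response} in under {self.limit_ms:g} ms.")


write_scenario = Scenario(
    quality="Performance",
    context="normal conditions",
    stimulus="a write operation on an entity occurs",
    response="the system responds",
    limit_ms=500,
)

print(write_scenario.describe())
print(write_scenario.is_satisfied_by(320))  # True
print(write_scenario.is_satisfied_by(650))  # False
```

Note that "normal conditions" is still vague. Part of refining a scenario is pinning the context down, for example to a stated number of concurrent users.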

A useful tool for generating, refining, and prioritising these scenarios is the Quality Attribute Workshop. The workshop gathers relevant stakeholders and works through the following steps:

- identify the important quality attributes and refinements (the architecture drivers)

- brainstorm scenarios with a broad selection of stakeholders. At this stage those scenarios can be quite high level and as simple as a bit of prose on some post-it notes.

- scenarios are then grouped together as appropriate and prioritised using voting from those present

- the highest priority scenarios are then refined using the “Context, Stimulus, Response” format and quantified.

- finally, those scenarios are classified for (Benefit, Difficulty to Realise) using a High, Middle, and Low rating.
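The voting and classification steps are simple enough to sketch in a few lines of Python. Everything here is illustrative: the scenario names and votes are made up, the H/M/L codes stand for High, Middle, and Low, and the ordering in `review_order` (high benefit first, then hardest to realise first) is my own assumption rather than something the workshop prescribes.

```python
from collections import Counter

RATING = {"H": 3, "M": 2, "L": 1}


def prioritise(votes, top_n):
    """Return the top_n scenarios by vote count; ties are broken by name."""
    counts = Counter(votes)
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [name for name, _ in ranked[:top_n]]


def review_order(classified):
    """Order scenarios by (benefit, difficulty), both descending.

    classified maps scenario name -> (benefit, difficulty), each H, M or L.
    """
    return sorted(
        classified,
        key=lambda name: (-RATING[classified[name][0]],
                          -RATING[classified[name][1]],
                          name),
    )


# One entry per vote cast in a hypothetical workshop.
votes = ["write latency", "failover", "write latency", "audit trail",
         "failover", "write latency", "new rule type"]

top = prioritise(votes, top_n=2)
print(top)  # ['write latency', 'failover']

# (Benefit, Difficulty to Realise) for the refined scenarios.
classified = {"write latency": ("H", "M"), "failover": ("H", "H")}
print(review_order(classified))  # ['failover', 'write latency']
```

In a real workshop the value is in the discussion that produces the votes and ratings, so treat the arithmetic as bookkeeping and nothing more.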

These techniques for generating scenarios are especially helpful when running an architecture review in a domain in which you are not an expert - for example if you are called in as an external reviewer. Additionally, they can be useful when one of the objectives is to improve understanding of the system drivers among a larger group.

It may not always be useful, or necessary, to spend time generating scenarios. Creating scenarios with stakeholders is time consuming and it can be challenging to get them refined and quantified. An IEEE Software article from 2008 includes a debate between Tom Gilb and Alistair Cockburn on the relative merits of quantifying these scenarios and the return on investment for the amount of work you put in. Unfortunately, the debate is behind the IEEE paywall, but you can read Alistair’s thoughts from his website and Tom’s writings on the benefits of requirements quantification are well detailed. Additionally, you may not have time to run these workshops within the time constraints of the review, or perhaps the scope of the review doesn’t require detailed scenarios. But being able to elicit and detail evaluation criteria using scenarios is a useful technique to have.

Checklists

Checklists are probably the most used technique in practice for capturing evaluation criteria. Whilst scenarios are good for capturing project and domain specific evaluation criteria, checklists are good for capturing the technical architecture issues that exist across multiple projects. For example, I have a checklist that deals solely with integration architecture that I use on most projects.

There’s not much to say about using checklists. Simply create and maintain checklists for the quality issues that exist across all projects and use them in your reviews. The answers to this question on stackexchange provide a great collection of checklists. For instance, this one from Code Complete:

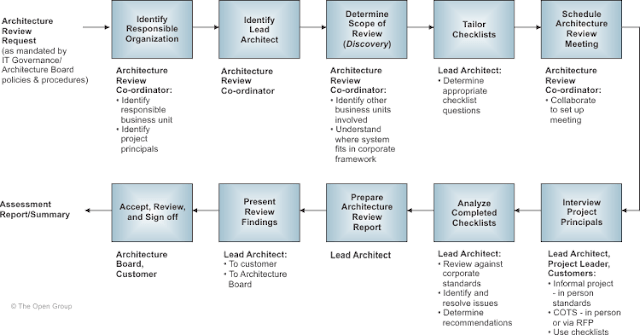

Additionally, TOGAF recommends the whole architecture review process be designed around checklists.

The TOGAF architecture compliance review process is not as detailed as the ones I’ll get to in later posts, but the TOGAF guide provides a useful set of checklists for areas such as:

- Hardware and Operating System Checklist

- Software Services and Middleware Checklist

- Applications Checklists

- Information Management Checklists

- Security Checklist

- System Management Checklist

- System Engineering/Overall Architecture Checklists

- System Engineering/Methods & Tools Checklist

Other sources for checklists include:

- These from Microsoft (one, two, and three).

- Checklist for conceptual architecture.

- and this one for architecture design documents.

Summary

In this post of the blog series we’ve looked at evaluation criteria, which are one of the most important parts of the Review Inputs (remember Review Inputs from the SARA report that we looked at in the last post?).

Let’s wrap it up with a rewording of the original tl;dr summary:

- determine which qualities (or quality attributes) are most important for the system to deliver - eg: performance, maintainability, and usability. These are the architecture drivers.

- use checklists, generic requirements and technical requirements/constraints for criteria that are relevant across solutions and projects.

- elicit verifiable scenarios that operationalise the generic requirements and exemplify qualities that are specific to your solution.

- use workshops with stakeholders (where appropriate) to both make use of their extensive domain knowledge and to get them to take ownership of system quality in addition to functional user stories.

- relying on experience can work if you have deep domain knowledge in addition to software architecture expertise.

The next post in the series will move on to the next major area in architecture reviews - Methods and Techniques.

Thanks to @marioaparicio for comments on a draft of this article.
